Stable Diffusion offers high-quality image generation, customization, and efficiency, making it ideal for creative and practical applications.

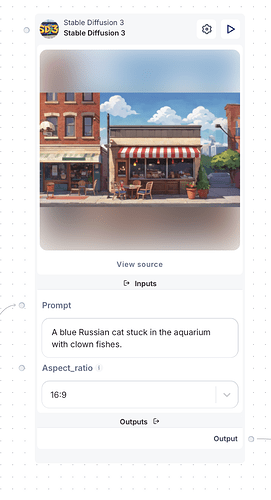

Prompt

The prompt is the most important parameter in Stable Diffusion. It’s a text description of the image you want to generate. The more detailed and specific your prompt, the better the results will be. For example, instead of just “a cat,” you could use “a fluffy orange tabby cat sitting on a windowsill, looking out at a sunny garden.”

Aspect ratio

This parameter determines the shape of your output image. Common aspect ratios include:

- 1:1 (square)

- 16:9 (widescreen)

- 4:5 (portrait)

- 3:2 (standard photo)

Choose the aspect ratio that best fits your intended use for the image.
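To make the ratios concrete, here is a minimal sketch that turns a ratio string into pixel dimensions. The helper name `dimensions_for_ratio`, the target of roughly one megapixel, and the rounding to multiples of 64 are illustrative assumptions, not documented Scade or Stable Diffusion behaviour, so check which sizes your model actually accepts.

```python
import math


def dimensions_for_ratio(ratio: str, target_pixels: int = 1024 * 1024,
                         multiple: int = 64) -> tuple[int, int]:
    """Return (width, height) close to target_pixels for a ratio such as '16:9'."""
    w_part, h_part = (int(part) for part in ratio.split(":"))
    if w_part <= 0 or h_part <= 0:
        raise ValueError("both sides of the ratio must be positive")

    # Ideal (unrounded) size for the requested area and ratio.
    height = math.sqrt(target_pixels * h_part / w_part)
    width = height * w_part / h_part

    def snap(value: float) -> int:
        # Round to the nearest multiple (an assumed model constraint).
        return max(multiple, round(value / multiple) * multiple)

    return snap(width), snap(height)


print(dimensions_for_ratio("1:1"))   # square
print(dimensions_for_ratio("16:9"))  # widescreen
```

Because both sides are rounded, the resulting shape only approximates the requested ratio.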

CFG (Classifier-Free Guidance) scale

CFG scale controls how closely the AI follows your prompt. It typically ranges from 1 to 30:

- Lower values (below 7): More creative, but may stray from the prompt

- Mid-range values (7-15): Good balance of creativity and prompt adherence

- Higher values (above 15): Strictly follows the prompt, but may result in less natural images

For most purposes, a CFG scale between 7 and 15 works well.
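Classifier-free guidance is usually described as extrapolating from an unconditional prediction toward the prompt-conditioned one, with the CFG scale as the multiplier. The sketch below shows that arithmetic on plain Python lists. The function name and the toy numbers are illustrative assumptions; real samplers apply the same formula to tensors at every denoising step.

```python
def apply_cfg(uncond: list[float], cond: list[float], scale: float) -> list[float]:
    """Combine unconditional and prompt-conditioned noise predictions.

    guided = uncond + scale * (cond - uncond)
    """
    if len(uncond) != len(cond):
        raise ValueError("predictions must have the same length")
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


# Toy values: the two predictions differ only in the first component.
uncond = [0.0, 1.0]
cond = [1.0, 1.0]

print(apply_cfg(uncond, cond, 1.0))  # scale 1 returns the conditioned prediction
print(apply_cfg(uncond, cond, 7.5))  # larger scales push further toward the prompt
```

A scale of 1 simply reproduces the prompt-conditioned prediction. Larger values exaggerate the difference between the two predictions, which is why very high settings can look over-processed.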

Image (for Image-to-Image generation)

For image-to-image generation, this is where you provide the input image that will be modified according to your prompt.

Prompt strength (for Image-to-Image generation)

This parameter, ranging from 0 to 1, determines how much the input image is changed:

- 0: No change to the input image

- 0.3-0.7: Moderate changes while preserving the original structure

- 1: Completely replaces the input image (similar to text-to-image generation)
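One common way to implement this parameter, used for example by the Hugging Face diffusers image-to-image pipeline, is to add noise to the input image and then run only a fraction of the denoising steps. The sketch below shows that relationship. The function name is hypothetical, and whether the Scade endpoint behaves the same way is an assumption.

```python
def effective_steps(total_steps: int, strength: float) -> int:
    """Number of denoising steps actually run for a given prompt strength."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    # A strength of 0 runs no steps (image unchanged); 1 runs all of them.
    return min(int(total_steps * strength), total_steps)


for strength in (0.0, 0.5, 1.0):
    print(strength, effective_steps(50, strength))
```

Under this scheme, low strength values are also faster, because fewer steps are executed.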

Steps

This parameter determines how many denoising iterations the model runs to create the image. More steps generally result in higher quality but take longer to generate:

- 20-30 steps: Quick generation, lower quality

- 30-50 steps: Good balance of speed and quality

- 50+ steps: Produces higher-quality images, but improvements slow down after about 100 steps.

Output format

This specifies the file format of the generated image:

- JPEG: Smaller file size, good for web use

- PNG: Lossless quality, better for editing

- WebP: Efficient modern format, smaller file sizes with good quality, supports transparency, ideal for web use

Output quality

For JPEG output, this determines the compression level. Higher quality means larger file sizes but better image fidelity.

Seed

The seed is a number that initializes the random noise used to start the image generation process. Using the same seed with the same prompt and settings will produce the same image. This is useful for:

- Reproducing specific results

- Tweaking other parameters while keeping the basic composition the same
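The reproducibility comes from how seeded random number generators work. As a small stand-in for the real latent noise, the sketch below uses Python's standard `random` module. The function name and the four-value "noise" are illustrative only; an actual pipeline draws a much larger tensor.

```python
import random


def initial_noise(seed: int, size: int = 4) -> list[float]:
    """Draw Gaussian noise from a generator initialised with the given seed."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


same_a = initial_noise(42)
same_b = initial_noise(42)
other = initial_noise(43)

print(same_a == same_b)  # identical seed, identical starting noise
print(same_a == other)   # a different seed gives different noise
```

Since the starting noise is identical, changing only one other parameter (for example the CFG scale) lets you see the effect of that parameter on an otherwise similar image.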

Negative prompt

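The negative prompt specifies what you don’t want in the image. It helps refine the output by telling the AI what to avoid. For instance, if you’re generating a landscape, you might use a negative prompt like “people, buildings, text” to keep the scene purely natural. List the unwanted elements directly: the negative prompt already means “avoid this,” so adding “no” in front of each term is unnecessary and can confuse the model.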

Private container

This setting controls whether the generation result is stored in a private container, meaning only you or authorized users can access it. Setting it to true ensures that the generations and their results are stored privately and securely.

Remember, the key to getting good results with Stable Diffusion is experimentation. Try different combinations of these parameters to see what works best for your specific needs.
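When experimenting, it can help to keep all the parameters above in one place and check them before sending a request. The following is a minimal sketch: the class, the field names, the defaults, and the 0-100 quality range are assumptions made for illustration, not the actual Scade or Stable Diffusion API schema.

```python
from dataclasses import asdict, dataclass
from typing import Optional

ALLOWED_FORMATS = {"jpeg", "png", "webp"}


@dataclass
class GenerationParams:
    """Hypothetical container for the parameters described above."""
    prompt: str
    negative_prompt: str = ""
    aspect_ratio: str = "1:1"
    cfg: float = 7.0
    steps: int = 30
    seed: Optional[int] = None       # assumed: None means "pick a random seed"
    output_format: str = "png"
    output_quality: int = 90         # assumed 0-100 scale for lossy formats
    image: Optional[str] = None      # input image reference for image-to-image
    prompt_strength: float = 0.6     # only meaningful when `image` is set
    private_container: bool = True

    def validate(self) -> None:
        if not self.prompt.strip():
            raise ValueError("prompt must not be empty")
        if not 1 <= self.cfg <= 30:
            raise ValueError("cfg should be between 1 and 30")
        if not 0 <= self.prompt_strength <= 1:
            raise ValueError("prompt_strength must be between 0 and 1")
        if self.steps < 1:
            raise ValueError("steps must be at least 1")
        if self.output_format not in ALLOWED_FORMATS:
            raise ValueError(f"output_format must be one of {sorted(ALLOWED_FORMATS)}")
        if not 0 <= self.output_quality <= 100:
            raise ValueError("output_quality must be between 0 and 100")

    def to_payload(self) -> dict:
        """Validate, then return a plain dict without unset optional fields."""
        self.validate()
        payload = {k: v for k, v in asdict(self).items() if v is not None}
        if self.image is None:
            payload.pop("prompt_strength", None)  # text-to-image: not applicable
        return payload


params = GenerationParams(
    prompt="a fluffy orange tabby cat sitting on a windowsill",
    negative_prompt="people, text",
    aspect_ratio="3:2",
    seed=42,
)
print(params.to_payload())
```

Fixing the seed while varying one field at a time makes it easier to attribute a change in the output to a single parameter.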

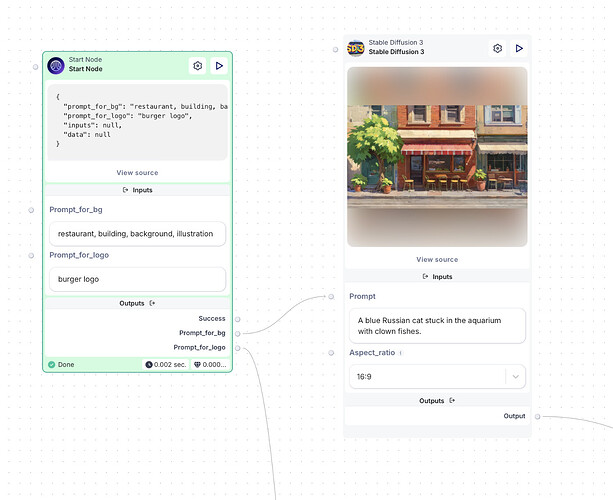

How to use it on Scade

Let’s explore how the model can be effectively used in tasks such as background generation.

We use Stable Diffusion because it can quickly generate detailed, high-quality visuals from user prompts. Whether the background is a simple gradient or a complex scene, Stable Diffusion can match it to the desired theme and style, which makes it well suited to creating unique, professional-looking designs.

This workflow for creating an image with a logo and background involves the following steps:

- User Input: The user provides prompts describing the logo and background.

- Logo Generation: The logo prompt is sent to DALL·E for creation, then processed through Rembg to remove the background.

- Background Generation: The background is generated using Stable Diffusion 3 (SD3) based on the user’s description.

- Image Composition: The logo and background are combined using an Image Overlay Utility to create a cohesive final image.

- Output: The completed image is delivered, ready for use, with options for refinement if needed.
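The steps above can be sketched as a small orchestration function. On Scade the workflow is assembled from nodes rather than written by hand, so this is only an illustration of the data flow. All names are hypothetical, and the four callables stand in for the DALL·E, Rembg, SD3, and Image Overlay steps instead of calling any real API.

```python
from typing import Callable

ImageBytes = bytes  # placeholder type for encoded image data


def build_branded_image(
    logo_prompt: str,
    background_prompt: str,
    generate_logo: Callable[[str], ImageBytes],
    remove_background: Callable[[ImageBytes], ImageBytes],
    generate_background: Callable[[str], ImageBytes],
    overlay: Callable[[ImageBytes, ImageBytes], ImageBytes],
) -> ImageBytes:
    """Run the logo-plus-background workflow with injected step functions."""
    logo = generate_logo(logo_prompt)                    # logo generation
    logo_cutout = remove_background(logo)                # background removal
    background = generate_background(background_prompt)  # background generation
    return overlay(background, logo_cutout)              # image composition


# Stub steps that only label the data, to show the order of operations.
result = build_branded_image(
    "minimal fox logo",
    "soft sunset gradient",
    generate_logo=lambda p: b"logo:" + p.encode(),
    remove_background=lambda img: img + b"|cutout",
    generate_background=lambda p: b"bg:" + p.encode(),
    overlay=lambda bg, fg: bg + b"+" + fg,
)
print(result)
```

Passing the steps in as functions keeps the sketch independent of any particular service, and makes it easy to swap one step (for example a different background model) without touching the rest.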