Sometimes you need better results than a single prompt can deliver. Since o1 is still fairly expensive and not available to everyone, switching to a stronger model isn’t an option either. In that case, having several AIs interact can help. I suggest we look at a few architectures for these interactions. Your comments and suggestions would be greatly appreciated.

- Planning and response

This approach is suitable for solving more complex problems. Planning also helps you write longer and better texts.

The first AI drafts an action plan. The second AI performs the task by following that plan, which is passed to it as part of its system prompt.

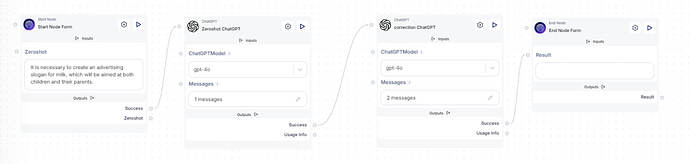
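Here is a minimal Python sketch of the planning pipeline. It assumes each AI is wrapped in a hypothetical function that takes a system prompt and a user message and returns the reply text. That `Ask` wrapper, the name `plan_and_respond`, and the prompt wording are illustrative assumptions, not taken from the original workflow.

```python
from typing import Callable

# Hypothetical wrapper: (system_prompt, user_message) -> reply text.
# Swap in a real API call or a pipeline node from your tool.
Ask = Callable[[str, str], str]


def plan_and_respond(planner: Ask, executor: Ask, request: str) -> str:
    # Step 1: the first AI only writes a plan; it does not solve the task.
    plan = planner(
        "You are a planner. Write a numbered, step-by-step plan for the "
        "user's task. Do not carry out the task itself.",
        request,
    )
    # Step 2: the plan goes into the second AI's system prompt, while the
    # user's original request is passed through unchanged.
    system = "Complete the user's task by following this plan:\n" + plan
    return executor(system, request)
```

Because only the plan travels through the system prompt, the executor still sees the user’s request word for word.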

- Role and zero-shot

This approach is useful for tasks that require professional expertise, or when you want a more detailed, in-depth answer.

The first AI defines the role the second AI will play, typically a description of a specialist with particular skills and knowledge. The second AI then executes the original user request from within that role.

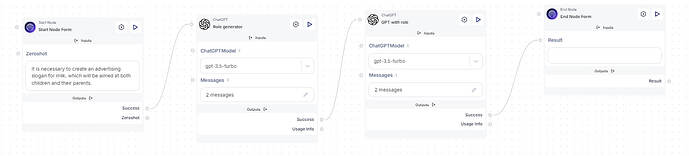
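A minimal Python sketch of the role step follows. It assumes the same kind of hypothetical `Ask` wrapper (system prompt and user message in, reply text out). The function name and the role-writing prompt are illustrative, not the author’s exact prompts.

```python
from typing import Callable

# Hypothetical wrapper: (system_prompt, user_message) -> reply text.
Ask = Callable[[str, str], str]


def role_then_answer(role_writer: Ask, expert: Ask, request: str) -> str:
    # Step 1: the first AI describes the specialist best suited to the request.
    role = role_writer(
        "Describe, in the second person, the expert best suited to answer "
        "the user's request: their profession, skills and knowledge. "
        "Output only the description.",
        request,
    )
    # Step 2: that description becomes the second AI's system prompt, and the
    # original request is sent unchanged (zero-shot: no examples added).
    return expert(role, request)
```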

- Response, correction

One of the simplest approaches, yet still effective.

The first AI executes the user request. The second AI reviews the response and corrects any errors it finds.
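The two-step correction can be sketched in Python as follows. This assumes a hypothetical `Ask` wrapper around each model call. An empty system prompt stands in for “no extra instructions”, and the editor prompt is only an example.

```python
from typing import Callable

# Hypothetical wrapper: (system_prompt, user_message) -> reply text.
Ask = Callable[[str, str], str]


def respond_then_correct(responder: Ask, corrector: Ask, request: str) -> str:
    # Step 1: a plain answer to the user's request.
    draft = responder("", request)
    # Step 2: the corrector sees both the request and the draft, so it can
    # judge whether the draft actually answers what was asked.
    return corrector(
        "You are an editor. Fix any factual, logical or language errors in "
        "the answer. If it is already correct, return it unchanged. "
        "Output only the corrected answer.",
        f"Request:\n{request}\n\nAnswer:\n{draft}",
    )
```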

- Response, criticism, correction

A more elaborate version of the correction method that adds one extra step.

The first AI executes the user request as is, with no extra processing. The second AI checks the answer and describes what could be corrected or how the result could be improved. The third AI writes the final answer, taking into account the critique produced in the previous step.

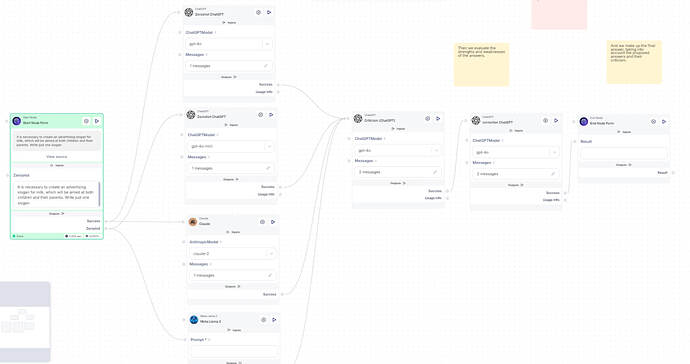
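The three-step version can be sketched in Python like this. It assumes a hypothetical `Ask` wrapper per model. The key design choice is that the critic only lists problems and the rewriter gets the request, the draft and the critique together.

```python
from typing import Callable

# Hypothetical wrapper: (system_prompt, user_message) -> reply text.
Ask = Callable[[str, str], str]


def respond_critique_rewrite(responder: Ask, critic: Ask, rewriter: Ask,
                             request: str) -> str:
    # Step 1: answer the request with no extra instructions.
    draft = responder("", request)
    context = f"Request:\n{request}\n\nAnswer:\n{draft}"
    # Step 2: the critic lists errors and improvements but does not rewrite.
    critique = critic(
        "Review the answer. List concrete errors and ways to improve it. "
        "Do not rewrite the answer.",
        context,
    )
    # Step 3: the rewriter produces the final answer using the critique.
    return rewriter(
        "Rewrite the answer so that it addresses every point of the "
        "critique. Output only the final answer.",
        f"{context}\n\nCritique:\n{critique}",
    )
```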

- Different answers, criticism, solution

We send the original user prompt (zero-shot) to 4 different AIs.

Then we evaluate the strengths and weaknesses of each answer and compose the final answer from the proposed answers and their critique. For the last two stages, it is better to use the most “powerful” AI available.
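A possible Python sketch of this ensemble follows. It assumes every model sits behind a hypothetical `Ask` wrapper and that one strong `main` model handles both the critique and the final answer. Running the worker calls in parallel threads is our own addition to reduce wall-clock time, not part of the original design.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Sequence

# Hypothetical wrapper: (system_prompt, user_message) -> reply text.
Ask = Callable[[str, str], str]


def ensemble_critique_solve(workers: Sequence[Ask], main: Ask,
                            request: str) -> str:
    # Stage 1: every worker answers the same zero-shot prompt. Calls run in
    # parallel threads; pool.map keeps the results in worker order.
    with ThreadPoolExecutor(max_workers=len(workers)) as pool:
        answers = list(pool.map(lambda ask: ask("", request), workers))
    numbered = "\n\n".join(
        f"Answer {i}:\n{a}" for i, a in enumerate(answers, 1))
    # Stage 2: the strongest model lists strengths and weaknesses.
    critique = main(
        "For each answer, list its strengths and weaknesses.",
        f"Request:\n{request}\n\n{numbered}",
    )
    # Stage 3: the same model writes the final answer from both inputs.
    return main(
        "Write the best possible final answer to the request, using the "
        "candidate answers and the critique. Output only the final answer.",
        f"Request:\n{request}\n\n{numbered}\n\nCritique:\n{critique}",
    )
```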

Pretty interesting topic. Can you send a JSON so I can test it out?

Yeah, sure, sorry I didn’t send the link to the files right away. I’d really appreciate any feedback!

https://drive.google.com/drive/folders/1hY5IuFgs4uguMqWYr-C7tzkxQwblOeol?usp=drive_link

For a start, not bad at all. The 5th example isn’t exactly cheap: a lot of nodes run, and it takes quite a while. Llama on Scade in particular takes forever to process.

There’s also a weak spot in the 5th example: the “Main” AI that has to make the final decision. An alternative architecture would be to have all the AIs vote for the best answer and pick the winner. But honestly, that’s even slower and more expensive.
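The voting alternative could look roughly like this in Python. It assumes a hypothetical `Ask` wrapper per voter and that each voter replies with the number of its preferred answer. The tie-break (lower number wins) and the fallback to the first answer when no valid votes arrive are arbitrary choices made for this sketch.

```python
import re
from collections import Counter
from typing import Callable, Sequence

# Hypothetical wrapper: (system_prompt, user_message) -> reply text.
Ask = Callable[[str, str], str]


def vote_for_best(voters: Sequence[Ask], request: str,
                  answers: Sequence[str]) -> str:
    numbered = "\n\n".join(
        f"Answer {i}:\n{a}" for i, a in enumerate(answers, 1))
    votes = Counter()
    for ask in voters:
        reply = ask(
            "Pick the single best answer to the request. "
            "Reply with its number only.",
            f"Request:\n{request}\n\n{numbered}",
        )
        match = re.search(r"\d+", reply)
        # Ignore replies with no number or an out-of-range number.
        if match and 1 <= int(match.group()) <= len(answers):
            votes[int(match.group())] += 1
    if not votes:
        return answers[0]  # arbitrary fallback when no vote is valid
    # Most votes wins; on a tie, the lower-numbered answer wins.
    best = min(votes, key=lambda n: (-votes[n], n))
    return answers[best - 1]
```

Each voter sees every answer, so the cost grows with both the number of voters and the total length of the answers. That matches the concern above about speed and price.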

In articles, I’ve often seen similar solutions, just run in a loop. I think it’s worth starting with cyclical designs like Answer → Answer Analysis → Correction → Answer Analysis 2 → Correction 2…
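A cyclical version can be sketched in Python as follows. It assumes a hypothetical `Ask` wrapper per model and a made-up stop token (`NO ISSUES`) that the analyst is told to emit when it finds nothing to fix. The `max_rounds` cap is our own addition to bound cost.

```python
from typing import Callable

# Hypothetical wrapper: (system_prompt, user_message) -> reply text.
Ask = Callable[[str, str], str]

STOP = "NO ISSUES"  # hypothetical token the analyst emits when satisfied


def refine_loop(responder: Ask, analyst: Ask, corrector: Ask,
                request: str, max_rounds: int = 3) -> str:
    answer = responder("", request)
    for _ in range(max_rounds):
        # Analysis step: list problems, or signal that there are none.
        analysis = analyst(
            "Analyze the answer and list concrete problems. "
            f"If there are none, reply exactly {STOP}.",
            f"Request:\n{request}\n\nAnswer:\n{answer}",
        )
        if analysis.strip() == STOP:
            break  # analyst is satisfied; stop early to save calls
        # Correction step: rewrite the answer using the analysis.
        answer = corrector(
            "Rewrite the answer to fix every problem in the analysis. "
            "Output only the corrected answer.",
            f"Request:\n{request}\n\nAnswer:\n{answer}\n\nAnalysis:\n{analysis}",
        )
    return answer
```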

Thanks for the ideas! I’ll try to implement them soon.

Maybe just add a prompt generator as one of the steps and switch from zero-shot to a prepared system prompt?