DALL·E is an AI image model from OpenAI that generates detailed, realistic images from text descriptions, letting users visualize concepts and ideas.

Prompt

A text description of the image you want to generate. It is the most important parameter for DALL-E, because it guides the model toward the desired image. For example: “A surreal landscape with floating islands and a purple sky”.

DALL-E model type

There are two main DALL-E models available:

- DALL-E 2: The earlier model with robust capabilities

- DALL-E 3: The newer model with improved image generation fidelity and detail

N (Number of images)

Specifies the number of images to generate:

- For DALL-E 3: Only 1 image can be generated per request

- For DALL-E 2: Up to 10 images can be generated in a single request
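The per-model limit on N can be checked before a request is sent. This is a minimal sketch, assuming the OpenAI API model identifiers `dall-e-2` and `dall-e-3` are what the node sends; `check_n` and `requests_needed` are hypothetical helpers, not part of Scade or any SDK. The second helper shows that collecting several DALL-E 3 images means sending several requests.

```python
# Per-model limit on n. Keys are the OpenAI API model names (assumed to
# match what the Scade node sends); the helper names are hypothetical.
MAX_IMAGES_PER_REQUEST = {"dall-e-2": 10, "dall-e-3": 1}


def check_n(model: str, n: int) -> int:
    """Return n if the model accepts it in one request, else raise ValueError."""
    if model not in MAX_IMAGES_PER_REQUEST:
        raise ValueError(f"unknown model: {model!r}")
    limit = MAX_IMAGES_PER_REQUEST[model]
    if not 1 <= n <= limit:
        raise ValueError(f"{model} accepts n from 1 to {limit}, got {n}")
    return n


def requests_needed(model: str, total_images: int) -> int:
    """Number of requests required to collect total_images images."""
    limit = MAX_IMAGES_PER_REQUEST[model]
    return -(-total_images // limit)  # ceiling division without math.ceil


print(requests_needed("dall-e-3", 4))   # 4: one image per DALL-E 3 request
print(requests_needed("dall-e-2", 25))  # 3: batches of 10, 10 and 5
```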

DALL-E quality type

Available only for DALL-E 3, with two options:

- Standard: The default option, generates images faster

- HD: Produces images with finer details and greater consistency across the image

DALL-E response format type

Determines the format of the generated image in the API response:

- URL: Returns a URL pointing to the generated image (default)

- b64_json: Returns the base64-encoded image data in JSON format
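With `b64_json` the image arrives inside the response body and has to be decoded; with `url` it must be downloaded separately, and OpenAI documents these links as temporary. The sketch below assumes the OpenAI response layout, in which each entry of the top-level `data` list carries either a `url` or a `b64_json` field; the helper names and the fake payload are illustrative only.

```python
import base64

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def decode_images(payload: dict) -> list[bytes]:
    """Raw image bytes for every item returned with response_format="b64_json"."""
    return [base64.b64decode(item["b64_json"])
            for item in payload["data"] if "b64_json" in item]


def image_urls(payload: dict) -> list[str]:
    """Links for every item returned with response_format="url" (the default)."""
    return [item["url"] for item in payload["data"] if "url" in item]


# A hand-made payload standing in for a real API response.
fake = {"data": [{"b64_json": base64.b64encode(PNG_SIGNATURE + b"...").decode("ascii")}]}
images = decode_images(fake)
print(images[0].startswith(PNG_SIGNATURE))  # True
print(image_urls(fake))                      # []
```

The decoded bytes can be written straight to a `.png` file opened in binary mode.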

Size enum

Specifies the dimensions of the generated image:

- For DALL-E 3: Options are 1024x1024, 1024x1792, or 1792x1024

- For DALL-E 2: Options are 256x256, 512x512, or 1024x1024

DALL-E style type

Available only for DALL-E 3, with two options:

- Vivid: Generates hyper-real and dramatic images (default)

- Natural: Produces more subdued, realistic images
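The size, quality, style, and response format rules above can be combined into one request builder. This is a sketch under stated assumptions: the dictionary keys mirror the OpenAI Images API parameter names (`model`, `prompt`, `size`, `quality`, `style`, `response_format`), while `build_generation_params` itself is hypothetical. Leaving `quality` or `style` unset lets the service apply its defaults (standard and vivid).

```python
from typing import Optional

# Allowed values, copied from the parameter descriptions above.
SIZES = {
    "dall-e-2": {"256x256", "512x512", "1024x1024"},
    "dall-e-3": {"1024x1024", "1024x1792", "1792x1024"},
}
DALLE3_ONLY = {
    "quality": {"standard", "hd"},
    "style": {"vivid", "natural"},
}
RESPONSE_FORMATS = {"url", "b64_json"}


def build_generation_params(model: str, prompt: str, size: str = "1024x1024",
                            quality: Optional[str] = None,
                            style: Optional[str] = None,
                            response_format: str = "url") -> dict:
    """Validate options against the model and return a request-body dict."""
    if model not in SIZES:
        raise ValueError(f"unknown model: {model!r}")
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if size not in SIZES[model]:
        raise ValueError(f"{model} does not support size {size}")
    if response_format not in RESPONSE_FORMATS:
        raise ValueError(f"unsupported response_format: {response_format!r}")
    params = {"model": model, "prompt": prompt, "size": size,
              "response_format": response_format}
    for name, value in (("quality", quality), ("style", style)):
        if value is None:
            continue  # omitted: the service applies its default
        if model != "dall-e-3":
            raise ValueError(f"{name} is only available for dall-e-3")
        if value not in DALLE3_ONLY[name]:
            raise ValueError(f"unsupported {name}: {value!r}")
        params[name] = value
    return params


params = build_generation_params(
    "dall-e-3", "A surreal landscape with floating islands and a purple sky",
    size="1792x1024", quality="hd", style="natural")
print(params["size"], params["quality"], params["style"])  # 1792x1024 hd natural
```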

Image

Used in image editing and variation tasks to supply the input image, which is either modified or used as the base for new variations.

Mask

Used in image editing tasks to specify which parts of the input image should be modified. The fully transparent areas of the mask (where alpha is zero) mark the regions to be edited, and the mask should be a PNG with the same dimensions as the input image.

DALL-E operation type

Defines the type of operation to perform:

- create_image: Generates a new image based on a text prompt

- create_image_edit: Modifies an existing image based on a text prompt and a mask

- create_image_variation: Creates variations of an existing image
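Each operation type needs a different set of inputs. The sketch maps the operations to the OpenAI Images API endpoints they most likely correspond to; that correspondence is an assumption about how the Scade node works, and `plan_request` is hypothetical. The mask is treated as optional because OpenAI's edit endpoint also accepts an input image whose own transparent areas mark the edit region.

```python
# Hypothetical mapping from the operation types above to OpenAI Images API
# endpoints, with the inputs each one requires. Optional fields (n, size, ...)
# are not checked here.
OPERATIONS = {
    "create_image": ("/v1/images/generations", {"prompt"}),
    "create_image_edit": ("/v1/images/edits", {"image", "prompt"}),
    "create_image_variation": ("/v1/images/variations", {"image"}),
}


def plan_request(operation: str, inputs: dict) -> str:
    """Check that inputs suit the operation and return the endpoint path."""
    if operation not in OPERATIONS:
        raise ValueError(f"unknown operation: {operation!r}")
    endpoint, required = OPERATIONS[operation]
    missing = sorted(required.difference(inputs))  # iterating a dict yields its keys
    if missing:
        raise ValueError(f"{operation} is missing: {', '.join(missing)}")
    if "mask" in inputs and operation != "create_image_edit":
        raise ValueError("mask is only used by create_image_edit")
    return endpoint


print(plan_request("create_image_variation", {"image": b"<png bytes>"}))
# /v1/images/variations
```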

Remember that some of these parameters are specific to certain models or tasks. For example, the quality and style parameters are exclusive to DALL-E 3, while the mask parameter is used only for image editing tasks.

How to use on Scade:

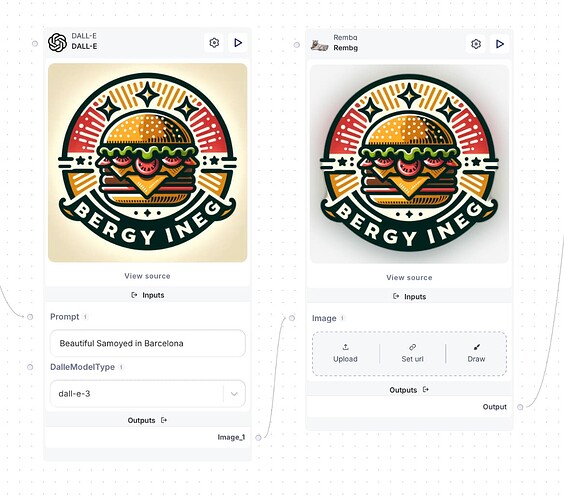

Case 1: Logo generation

Let’s explore how the model can be used for tasks such as logo generation, where its ability to interpret creative prompts and produce visually striking designs is especially valuable.

DALL-E stands out for its flexibility in producing logos in many styles, its ability to incorporate unusual ideas, and its speed in delivering multiple design options. This makes it well suited to generating high-quality, customized logos that match a user’s requirements.

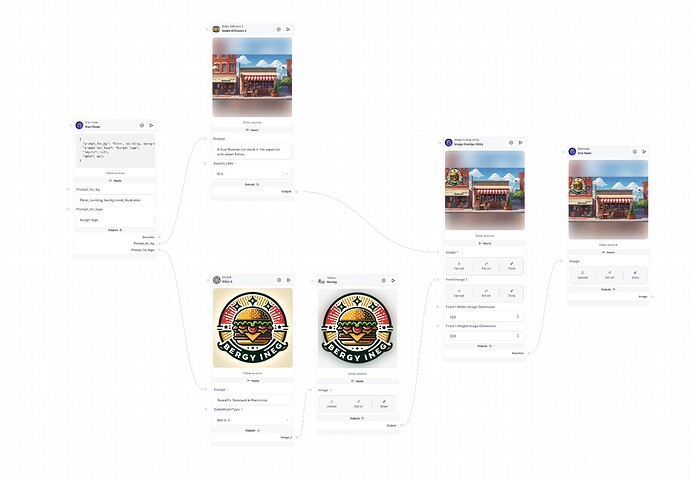

This workflow for creating an image with a logo and background involves the following steps:

- User Input: The user provides prompts describing the logo and the background.

- Logo Generation: The logo prompt is sent to DALL·E, and the resulting image is passed through Rembg to remove its background.

- Background Generation: The background is generated with Stable Diffusion 3 (SD3) based on the user’s description.

- Image Composition: The logo and background are combined with an Image Overlay Utility to create a cohesive final image.

- Output: The completed image is delivered, ready for use, with options for refinement if needed.
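How the Image Overlay Utility blends the two images is not described here. A common approach, and the one sketched below as an assumption, is Porter-Duff "source over" alpha compositing: wherever Rembg made the logo transparent, the background shows through. Images are plain nested lists of straight (non-premultiplied) RGBA tuples so the sketch needs no imaging library; `over` and `overlay` are hypothetical names.

```python
Pixel = tuple[int, int, int, int]  # straight (non-premultiplied) RGBA, 0-255


def over(src: Pixel, dst: Pixel) -> Pixel:
    """Porter-Duff "source over destination" for a single pixel."""
    sa, da = src[3] / 255, dst[3] / 255
    out_a = sa + da * (1 - sa)
    if out_a == 0:
        return (0, 0, 0, 0)  # both pixels fully transparent
    r, g, b = (round((s * sa + d * da * (1 - sa)) / out_a)
               for s, d in zip(src[:3], dst[:3]))
    return (r, g, b, round(out_a * 255))


def overlay(background: list[list[Pixel]], logo: list[list[Pixel]],
            left: int, top: int) -> list[list[Pixel]]:
    """Composite logo onto a copy of background, top-left corner at (left, top)."""
    result = [row[:] for row in background]
    for y, logo_row in enumerate(logo):
        for x, px in enumerate(logo_row):
            by, bx = top + y, left + x
            if 0 <= by < len(result) and 0 <= bx < len(result[by]):  # clip at edges
                result[by][bx] = over(px, result[by][bx])
    return result


blue, red, clear = (0, 0, 255, 255), (255, 0, 0, 255), (255, 0, 0, 0)
background = [[blue] * 3 for _ in range(2)]
composite = overlay(background, [[red, clear]], left=1, top=1)
print(composite[1])  # [(0, 0, 255, 255), (255, 0, 0, 255), (0, 0, 255, 255)]
```

The background is copied rather than modified in place, so the SD3 output stays available for another attempt at logo placement.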

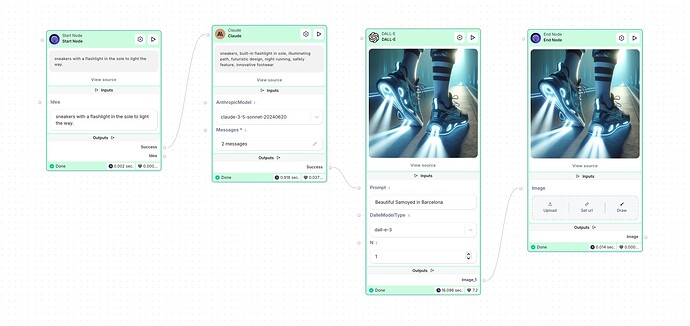

Case 2: Visual idea generator

This flow is designed to generate visual representations of product ideas.

- Start Node: A textual description of a product idea, e.g., “sneakers with a flashlight in the sole to light the way.”

- Prompt Generation (Claude Model): The description is processed by the Claude model to create a concise, well-structured prompt suitable for image generation.

- Image Generation (DALL-E): The generated prompt is passed to DALL-E 3 to create an image.

- End Node: The final generated image is collected and returned as the output.
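The four nodes form a simple chain, which can be sketched as one function that takes the two model calls as parameters. This is an illustration, not Scade's implementation: `visualize_idea` and `PROMPT_INSTRUCTION` are hypothetical, and the lambdas stand in for real Claude and DALL-E 3 calls so the flow runs offline.

```python
from typing import Callable

# Hypothetical instruction for the prompt-writing step; the real node's
# wording is not given in this guide.
PROMPT_INSTRUCTION = (
    "Rewrite this product idea as one concise image-generation prompt that "
    "describes the product, its key feature, materials, lighting and background:\n{idea}"
)


def visualize_idea(idea: str,
                   write_prompt: Callable[[str], str],
                   generate_image: Callable[[str], str]) -> dict:
    """Start node -> prompt generation -> image generation -> end node."""
    idea = idea.strip()
    if not idea:
        raise ValueError("the start node needs a product description")
    prompt = write_prompt(PROMPT_INSTRUCTION.format(idea=idea))  # Claude step
    image = generate_image(prompt)                               # DALL-E 3 step
    return {"idea": idea, "prompt": prompt, "image": image}


# Stand-ins so the flow runs offline; swap in real model calls.
result = visualize_idea(
    "sneakers with a flashlight in the sole to light the way.",
    write_prompt=lambda text: "Product photo: " + text.splitlines()[-1],
    generate_image=lambda prompt: "image-for:" + prompt,
)
print(result["prompt"])
```

Passing the model calls in as parameters keeps the flow logic separate from any particular SDK, which also makes it easy to test with stubs as above.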

Purpose:

The flow turns abstract product ideas into images quickly by chaining a language model (Claude) with an image model (DALL-E 3). It’s especially useful for:

- Concept design.

- Brainstorming and ideation.

- Creating visual aids for presentations or pitches.

Additional Notes:

- DALL-E settings allow for customization of quality, size, and style.

- This flow integrates clear instructions and configurable parameters, ensuring flexibility for different use cases.